Wait…Nano Banana?

Yes, that’s the actual nickname. Google DeepMind just released Gemini 2.5 Flash Image, and while the official name sounds slick, everyone’s calling it Nano Banana. You’ll find it in Google AI Studio, via the Gemini API, and for enterprises in Vertex AI.

So what’s the fuss? Nano Banana can generate, edit, and remix images with natural-language prompts. It’s fast, cheap, and surprisingly good at keeping characters consistent across multiple scenes. Think of it like Photoshop meets improv comedy – you give it some prompts, it tries. Most times you get gold, sometimes you get a trainwreck.

So, hang on tight!

The Problem with Image Creation

Design takes time. Product shots require reshoots. Marketing teams wait on designers for every tweak. Educators sketch diagrams and wish they looked better. And those of us without Photoshop skills? We’re usually stuck with Canva templates or clipart.

That’s why AI image generation has been so buzzy. But until now, most models were fun to play with and not precise enough for serious work.

Not too long ago I wrote about ChatGPT’s improved image generation (still exceptional for text to image…especially when you want to add words to the image!). However, it really struggled with image editing or using images as foundations for new ideas.

Meet Gemini 2.5 Flash Image

Launched last week, Gemini 2.5 Flash Image (its project name was Nano Banana and the public is refusing to let it go!) is Google DeepMind’s new image model.

With it you can:

- Edit with text prompts (“add a bookshelf,” “change the shirt to blue,” “remove the background”)

- Fuse multiple photos into one

- Keep characters consistent across different scenes

- Leverage world knowledge to interpret sketches or objects more accurately

It’s available through Google’s own tools and, like similar models, it’s being integrated into a variety of others, including Adobe Firefly (be still my heart! Adobe really needed this!) and Fal.ai (more on this later).

My Week of Going Bananas

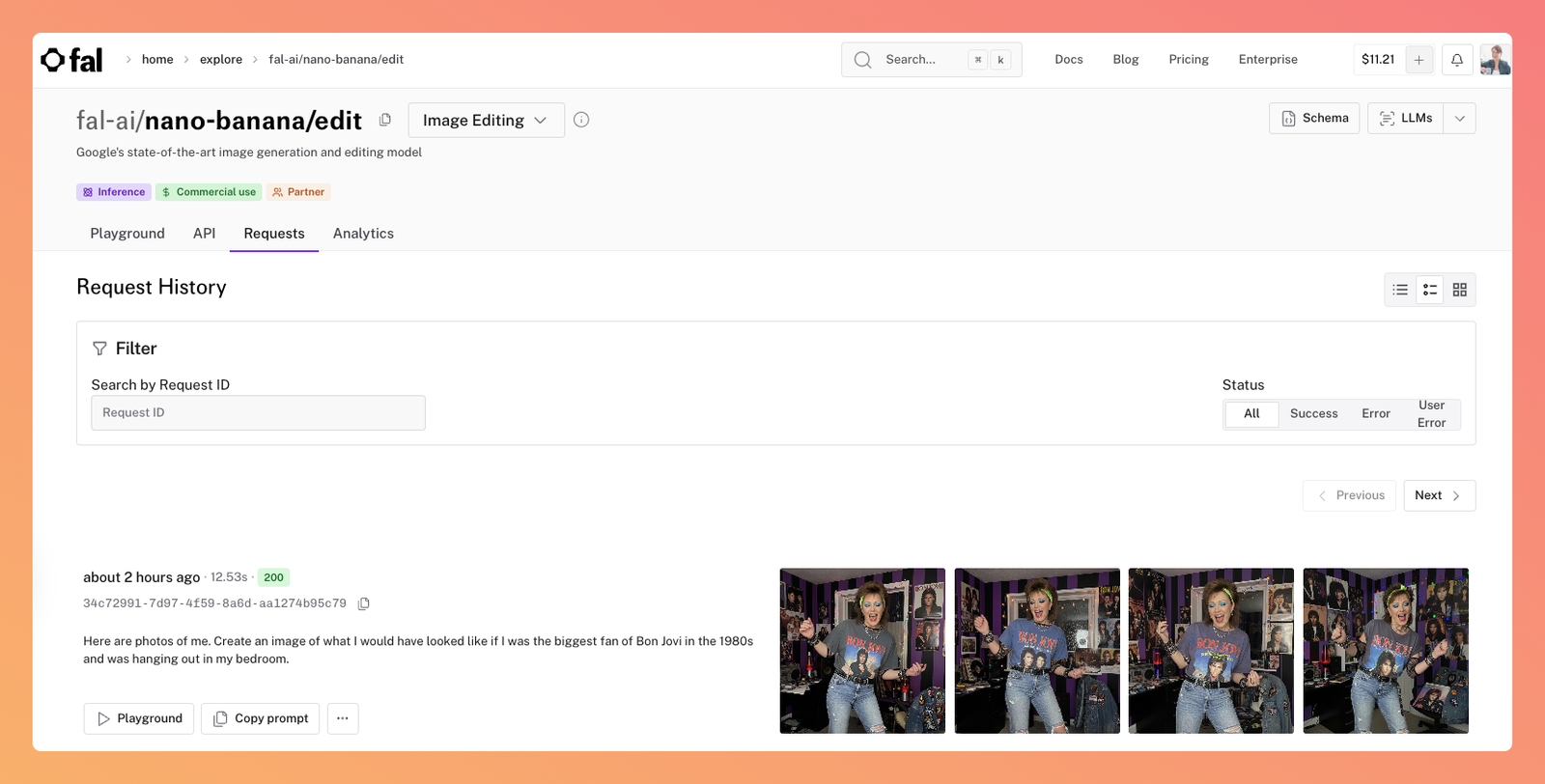

I spent the week running Nano Banana through its paces. The results? Equal parts wow, useful, and ridiculous.

Here is a sample of what I got:

It's Got Some Pros and Cons

This model has some really cool benefits, including:

- Speed & cost: Pennies per image, seconds per edit.

- Consistency: Keeps faces, products, and characters recognizable across prompts.

- Fusion: Combine multiple images into one coherent scene.

- Built-in protection: Blocks risky edits (like child images) and avoids unsafe use cases. That’s a win for responsible AI.

- Watermarking: Every output is tagged with SynthID, allowing us to identify what’s AI-generated. That’s essential in a world of deepfakes, and I’m excited for this to be surfaced in more places.

But it’s not perfect (nothing ever is, right?!)

- Artifacts: Missing limbs, mirrored water splashes, uncanny details.

- Overzealous filters: About 40% of my photos were blocked as “child images.” Good guardrail… but unless Botox works miracles, I’m not passing for 12.

- Text rendering: Still unreliable; expect gibberish more often than usable typography.

- Predictive: Remember that it’s a predictive generation tool so it can only create what it has “seen.”

- Quality: Image resolution and detail are limited, as is control over aspect ratio. This is part of why I’ll be excited to test this model once it’s available in Adobe Firefly.

While you can use Google AI Studio to access this model, I’d really encourage you to check out Fal.ai. This is my go-to platform for image and video creation because it’s pay-per-use (someone will surely stage an intervention if I get ANOTHER subscription!). Yes, it says it’s for developers, but it’s pretty straightforward to use for image generation, and I have total faith in you!

Should Businesses Care?

This is wild technology. It’s not just AI making pretty pictures; it’s AI inching toward usable creative control. The implications for marketing, e-commerce, education, and design are enormous.

- Marketing: Campaign visuals without expensive reshoots.

- E-commerce: Product variants, textures, and backgrounds on demand.

- Education: Whiteboard sketches turned into neat diagrams.

- Content & storytelling: Consistent characters across comics, explainer videos, or training content.

This absolutely could replace some of the work that human designers do. It will certainly speed up drafts, experiments, and iterations. But it is also making design more accessible to a huge group of people and, perhaps, creating a new way of working and creating.

Having said that, I’ve worked with many incredible designers, and their real skills shone in communication – interviewing me, taking vague goals or ideas and turning them into marketing gold. I don’t think their creativity, vision, and collaboration will be replaced anytime soon!

Critical Thinking Still Required!

Watermarks are great. Filters are important. But neither will save you if you take every image at face value.

Convincing ≠ correct. The swimming Vizsla looked realistic until you noticed the water splashes were mirrored. My landmark diagram looked fine until London Bridge ended up on dry land.

The tech is improving fast, but your judgment remains the most important editing tool.

"Critical thinking is the safeguard AI can’t provide."

Try It This Week

Let a Picture Paint 1,000 Words

Here are four quick experiments to see Nano Banana’s strengths (and quirks) for yourself:

- Restore a memory: Upload an old family photo and ask it to colorize or sharpen it. Pay attention to how well it handles detail.

- Mock up a change: Take a picture of your office or living room and prompt it to “add a bookshelf” or “repaint the walls blue.” See if the AI matches your vision.

- Swap a background: Drop yourself into a new setting: “me in a coffee shop” or “me on stage at a conference.” Great test of realism.

- Go from scribbles to scrumptious: Sketch something simple (like a flowchart or diagram) and have it clean it up into a polished version.

Pick one workflow—marketing visuals, product images, or internal training—and test Nano Banana. Note what it gets right, where it fumbles, and what questions it raises about trust, oversight, and quality.

If you’re not sure where to start, that’s where I come in. I help leaders and teams experiment strategically, separate hype from value, and integrate AI tools responsibly into their work. If you think that your conference or group would benefit from that, you know where to find me!